This is the second part of my Internet-of-Things telemetry project based on Azure.

The source of the project is hosted in the azure-veneziano GitHub repository.

Here are the other parts:

In this article I’ll show you how to setup some components of Windows Azure, in order to make the system working.

I won’t cover details such as “how to subscribe to the Azure platform” or similar. Please, consider the several posts around the web, that describes very well how to walk the first steps, as well the benefits coming from the subscription.

A good place to start is here.

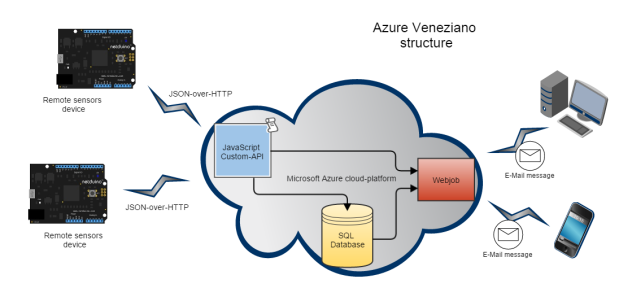

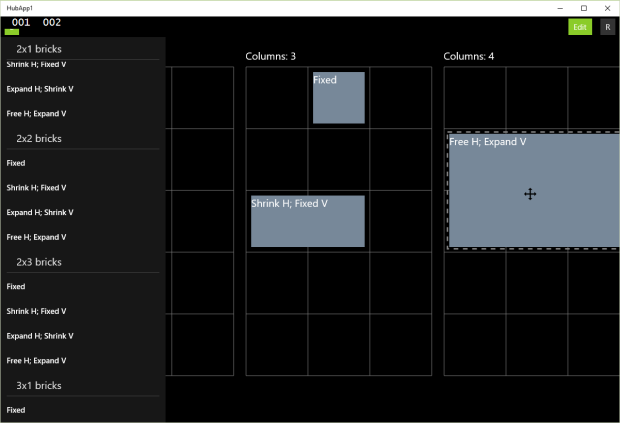

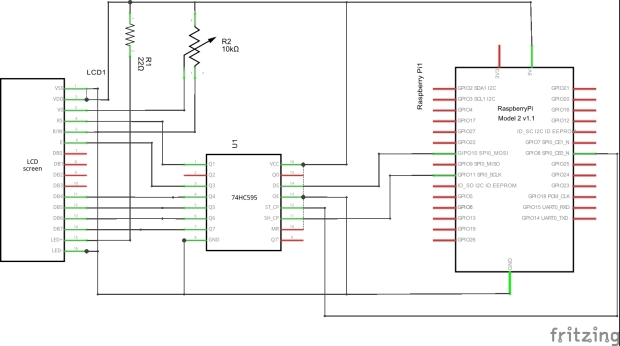

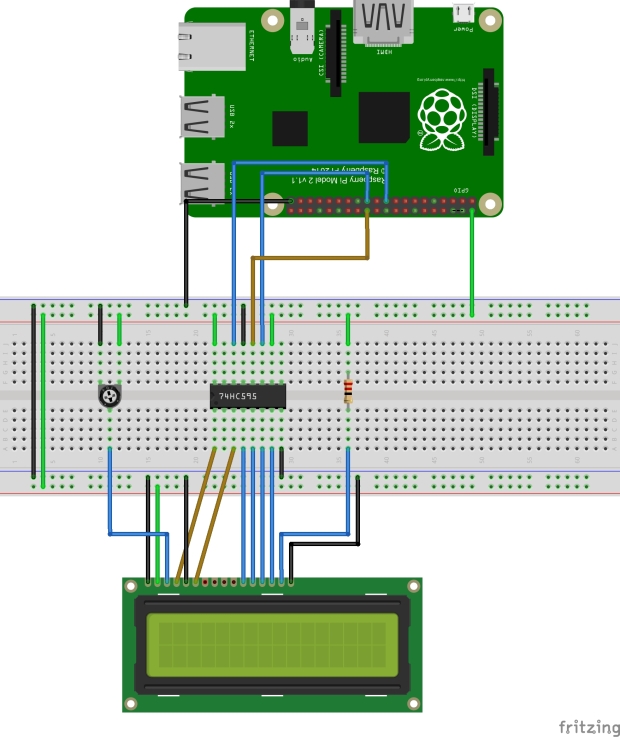

The system structure more in depth.

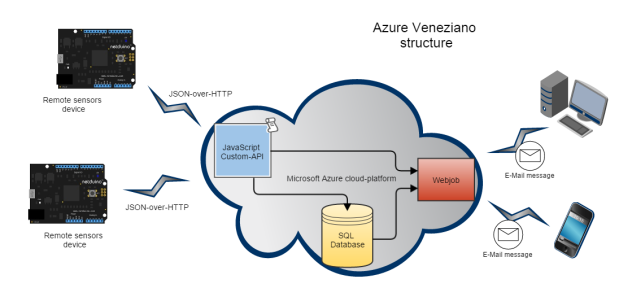

In the previous article there is almost no description about the system structure, mainly because the post is focused on the device. However, since here the key-role is for Azure, it’s better to dig a bit in depth around what’s the target.

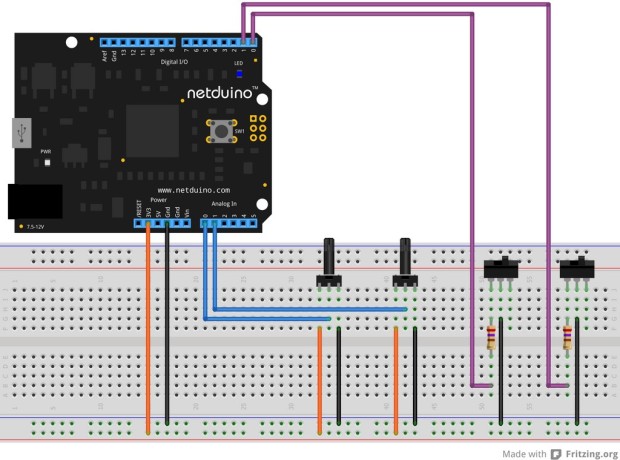

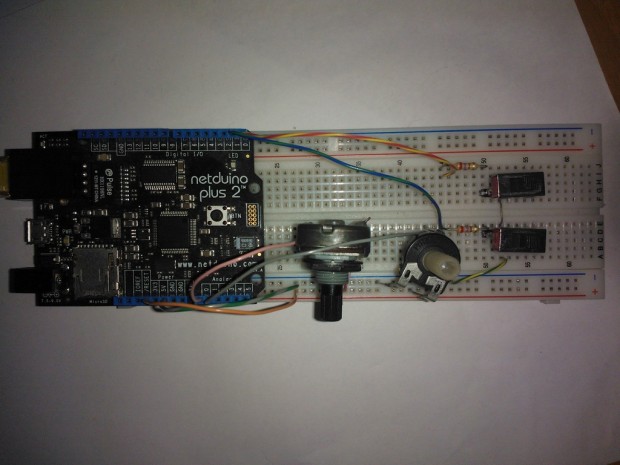

On the left there are a couple of Netduinoes as symbol of a generic, small device which interfaces with sensors, collects some data, then sends them to the Azure platform. This section is covered in the first part of the series.

The JSON-over-HTTP data sent by any device are managed by a “custom API” script within the Azure’s “Mobile Services” section. Basically a Node.JS JavaScript function which is called on every device’s HTTP request.

This script has two major tasks to do:

- parse the incoming JSON data, then store them into a SQL database;

- “wake-up” the webjob, because new data should be processed.

The database is a normal Azure SQL instance, where only two simple tables are necessary for this project. One is for holding the current variables state, that is every single datum instance incoming from any device. The other table depicts the “history” of the incoming data, that is the evolution of the state. This is very useful for analysis.

Finally, there is the “webjob”.

A webjob could be seen as a service or, more likely, as a console application. You can put (almost) anything into this .Net app, then it can started anytime. What I need is something like a endlessly running app, but in a “free-context” this service is shut-down after 20-30 minutes. That’s the way I used a trick to “wake it up” using kinda trigger from the script. Whenever new data are incoming the app is started, but can stay stopped whenever nothing happens.

The webjob task is just sending a mail upon a certain condition is met. In this article I won’t show anything sophisticated, than a very short plain-text mail. The primary goal here is setting up the Azure platform, and testing the infrastructure: in the next articles we’ll add several pieces in order to make this project very nice.

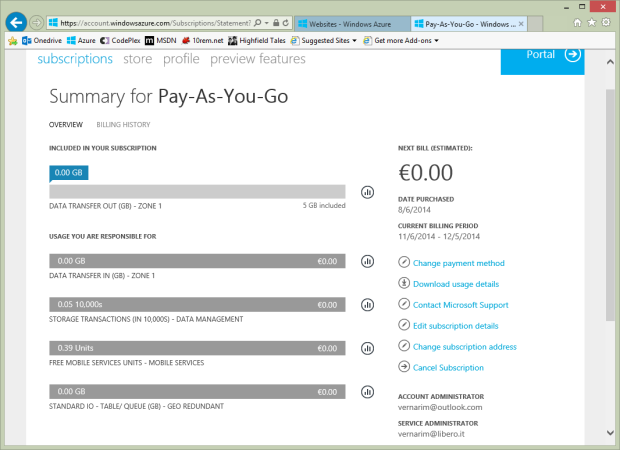

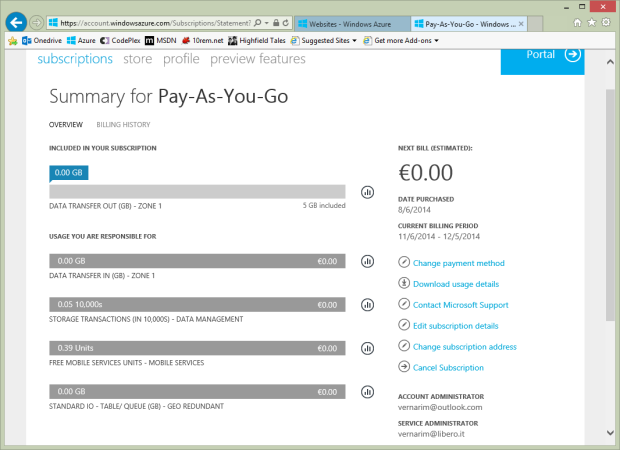

Looks nice, but…how much does cost all that?

Just two words about the cost of the Azure platform.

Entering into the Azure portal is much like as walking in Venezia: full of intriguing corners, each one different from others, and always full of surprises. The platform is really huge, but surprisingly simple to use.

I say that I was surprised, because you’ll be also surprised by realizing that many stuffs come for FREE. Unless you want to scale up (and get more professional) this project, your bill will stick to ZERO.

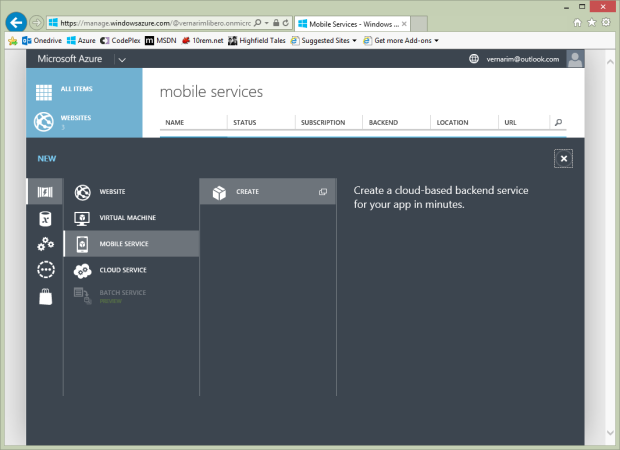

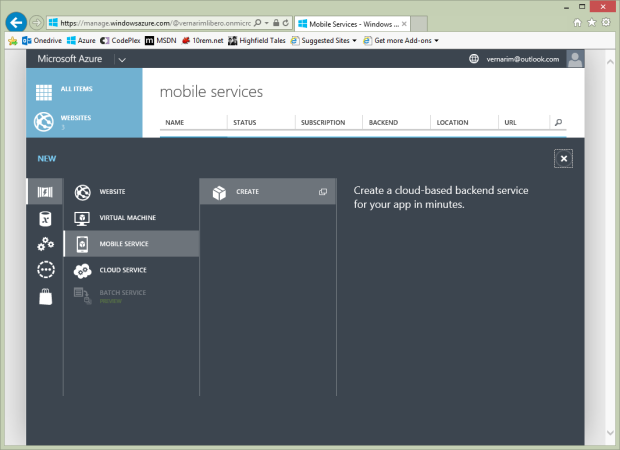

Setup the mobile service.

The Mobile Services are the most important components in order to interface any mobile device. The “mobile” term is rather oriented to devices like phones or small boards, but the services could be accessed even from a normal PC.

The first thing to do is create your own mobile service: this task couldn’t be more easy…

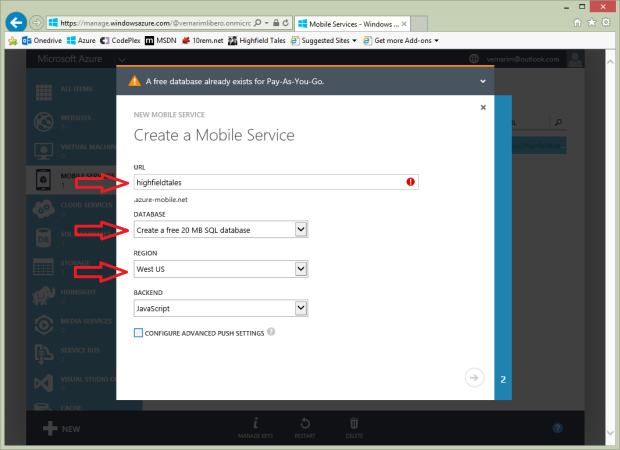

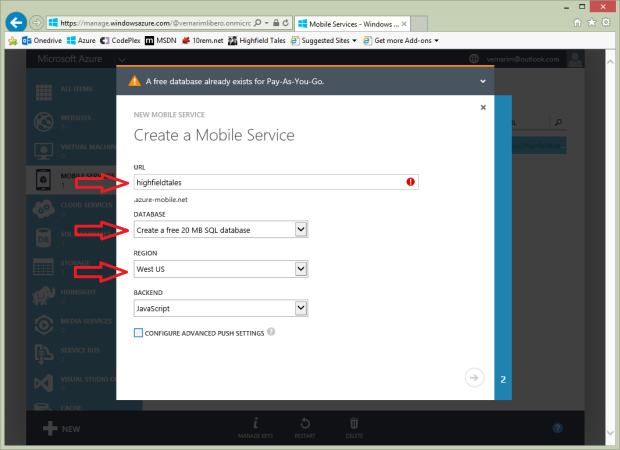

Type in your favorite service name, which has to be an unique identifier worldwide (as far I know).

About the database, ensure to pick the “Create a free 20 MB SQL database” (if you don’t have one yet), and the wizard will create automatically for you.

Two more parameters: select the closest region to you to host the service, then choose “JavaScript” as backend language for the management.

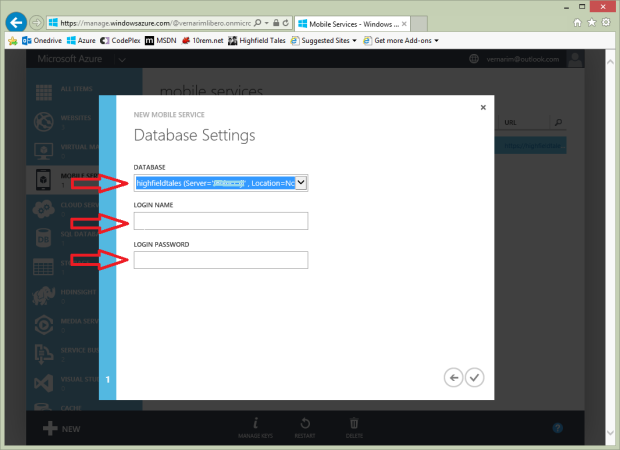

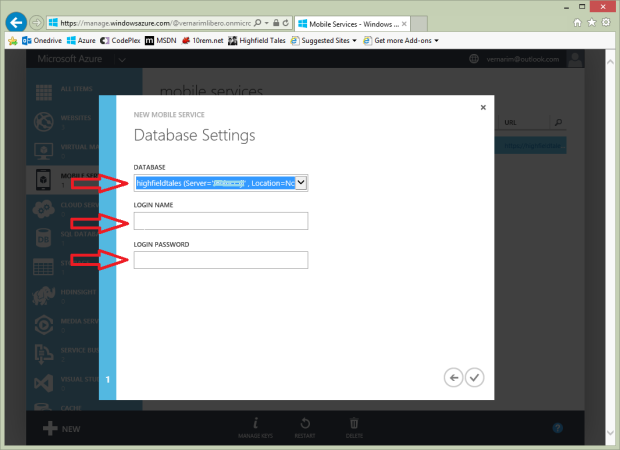

If you are creating a new database, you’ll face a second page in the wizard. Simply you have to specify the credentials to use to gain access to the database.

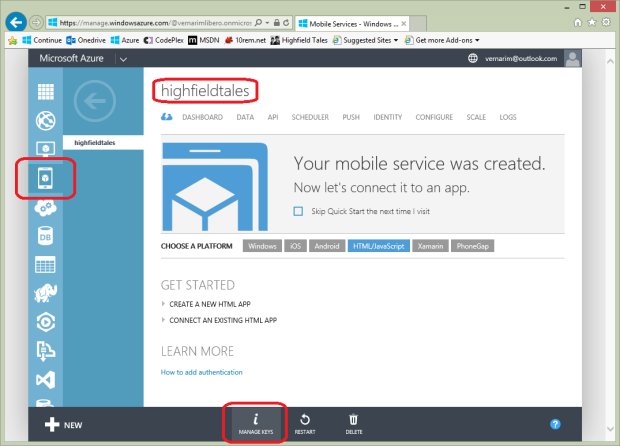

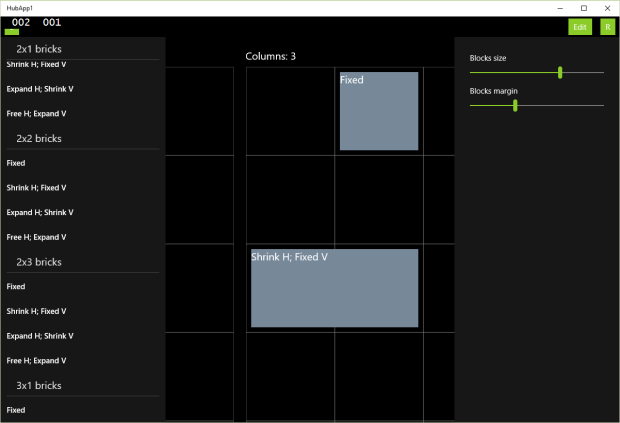

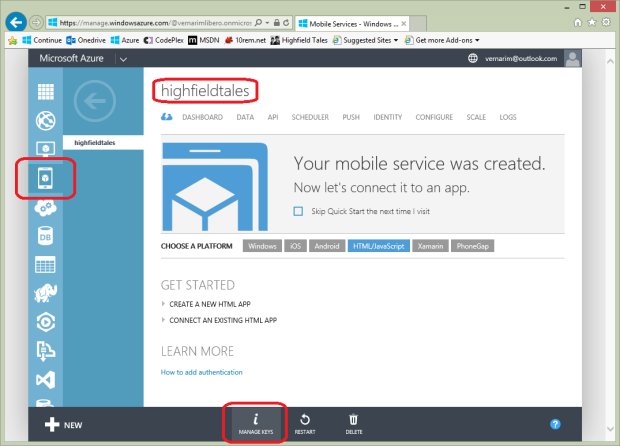

That’s all: within a few your brand new mobile service should be ready. The below sample view gives an overview about the service.

Please, notice that there are links where you can download sample apps/templates already configured with your own parameters!…Dumb-proof!

Also have a look at the bottom toolbar, where a “manage keys” button pops up some strange strings. Those strings are the ones that you should specify in the Netduino (and any other device) in order to gain access to the Azure Mobile Service.

public static void Main()

{

//istantiate a new Azure-mobile service client

var ms = new MobileServiceClient(

"(your service name)",

applicationId: "(your application-id)",

masterKey: "(your master key)"

);

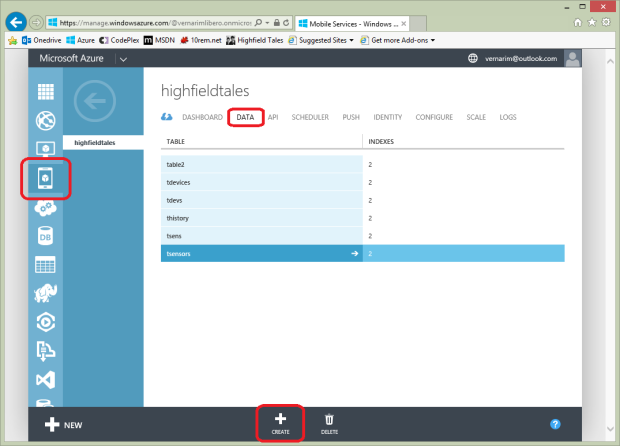

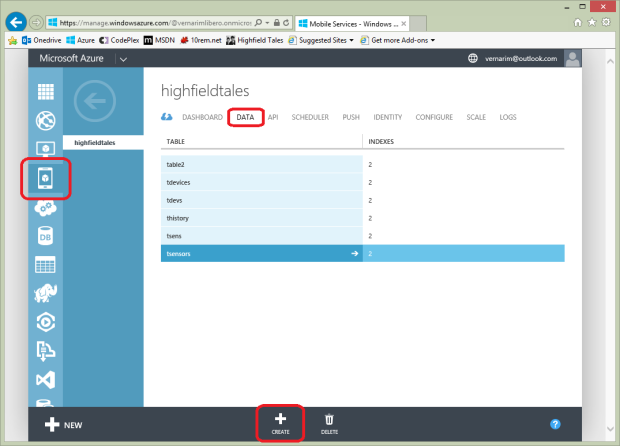

The next task to do is about creating the database tables.

We need just three tables, and (even surprising) we don’t need to specify any column-schema: it will created automatically upon the JSON structure defined in the Netduino device software. This feature is by default, but you can disable it in the “configure” section, with the “dynamic schema” switch.

| Table name |

Purpose |

| tdevices |

Each record is paired to a remote device and holds identification and status data of it. |

| tsensors |

Each record is paired to a “variable” defined by a certain device somewhere and holds identification and status data of it. |

| thistory |

Each record stores the value of a certain variable at the time it arrives on the server, or marks an event occurred. Think the table as a queue, where you can query the records in order to depict a certain variable’s value evolution over time. |

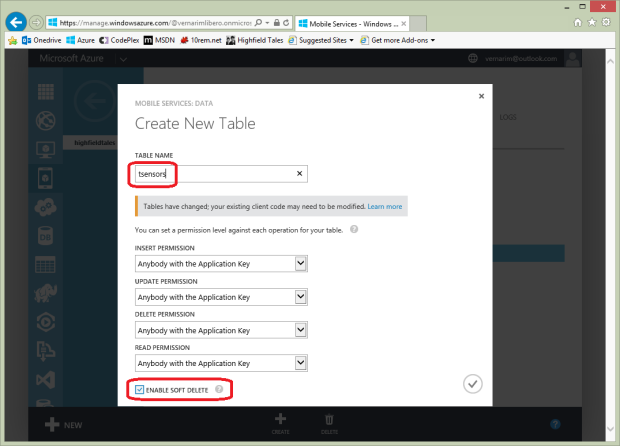

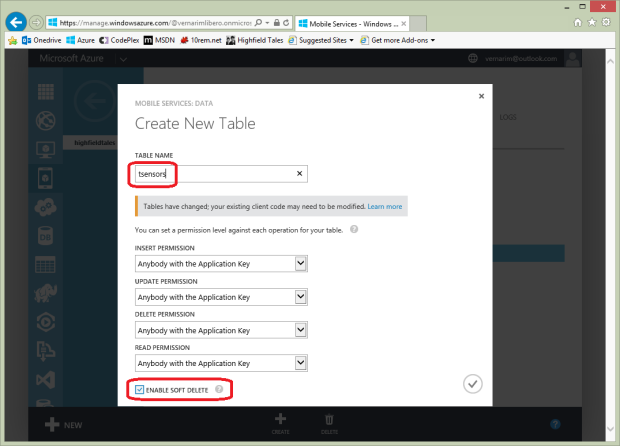

Press “create” and enter “tsensors”, then ensure checked the “enable soft delete” and confirm. Repeat the same for both the “tdevices” and the “thistory” tables, and your task is over.

The “soft delete” feature marks a record as “deleted” and keeps it, instead of removing from the table. You should enable this feature when you deal with concurrency. I personally think it is useful even for a simple dubugging. The problem is that is up to you “cleaning” the obsolete records.

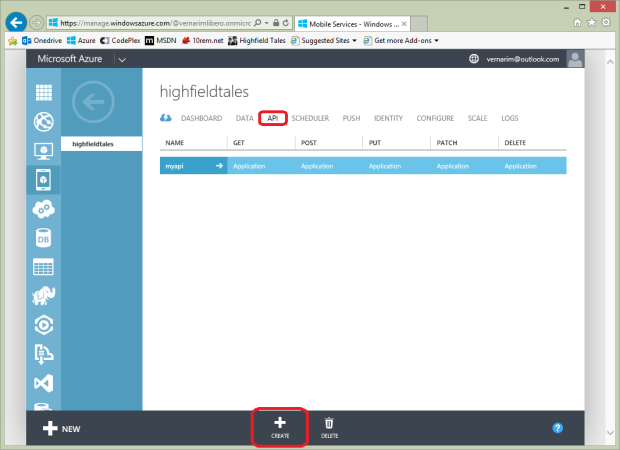

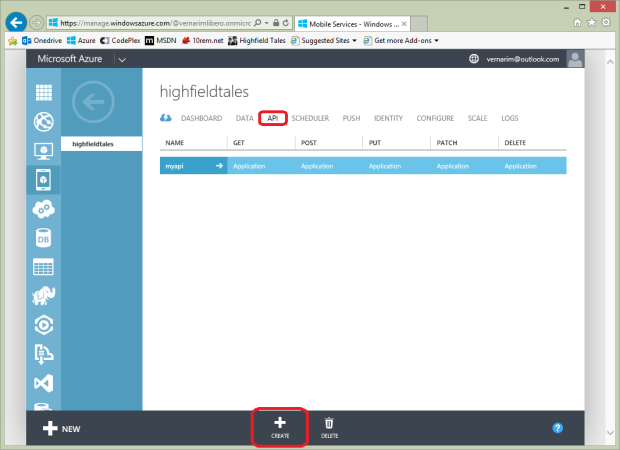

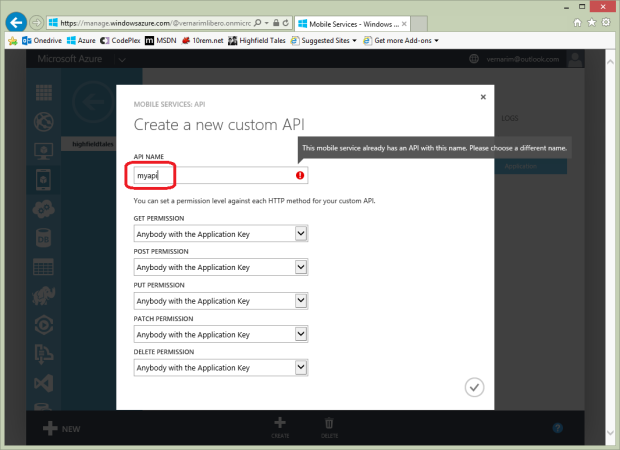

The last section to setup within the Mobile Service context is the “Custom API“, that is the code to run upon any incoming data request.

Simply select the “API” section, then press “create”.

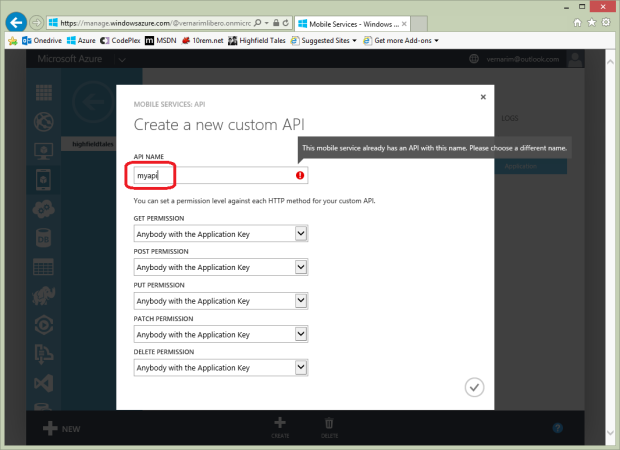

The wizard will ask you the name of the new API, as well as the permission grants to access it.

Back to the Netduino code, the API’s name should be specified on any request.

//execute the query against the server

ms.ApiOperation(

"myapi",

MobileServiceClient.Create,

jobj

);

Technically speaking, the name is the very last segment of the URI path which maps the request against Azure.

http://{your-service-name}.azure-mobile.net/api/{your-api-name}

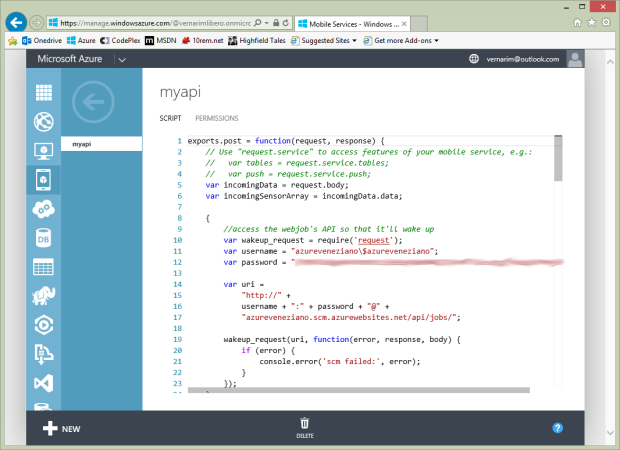

At this point you can begin to type the script in.

The device-side entry-point for the data.

The handler for the incoming requests is just a JavaScript function. Better: one function per HTTP method. However, since the primary goal is pushing data from a device into the server, the method used is POST (CREATE, in the REST terminology) all the times.

The JavaScript environment comes with Node.Js, which is very easy yet compact to use. I’m NOT a JavaScript addict, but honestly I didn’t have much effort in coding what I wanted.

The “script” section of the API allows to edit your script as you were on Visual Studio. The only missing piece is the Intellisense, but for JavaScript I don’t need it actually.

The script we need is structured as follows:

exports.post = function(request, response) {

// section: wake-up the webjob

// section: update/insert the device's info into the "tdevices" table

// section: update/insert the device's data into the "tsensors" table

// section: append the device's data to the "thistory" table

};

Let’s face the database updating first.

For the “tdevices” table the script is as follows:

var devicesTable = request.service.tables.getTable("tdevices");

var sensorsTable = request.service.tables.getTable("tsensors");

var historyTable = request.service.tables.getTable("thistory");

//update/insert the device's info record

devicesTable

.where({

devId: incomingData.devId

}).read({

success: function(results) {

var deviceData = {

devId: incomingData.devId,

version: incomingData.ver

};

var flush = false;

if (results.length > 0) {

//We found a record, update some values in it

flush = (results[0].version != deviceData.version);

results[0].devId = deviceData.devId;

results[0].version = deviceData.version;

devicesTable.update(results[0]);

//Respond to the client

console.log("Updated device", deviceData);

request.respond(200, deviceData);

} else {

//Perform the insert in the DB

devicesTable.insert(deviceData);

//Reply with 201 (created) and the updated item

console.log("Added new device", deviceData);

request.respond(201, deviceData);

}

manageSensorTable(flush);

}

});

As the data come in, the first thing is to look for the correspondent existent entry in the “tdevices” table, using the device’s identification as key. If the record does exist, it will be “updated”, otherwise a new entry will be added.

Upon an update, the logic here is comparing the incoming “configuration” version with the corresponding value stored in the table. If they don’t match, the “flush” flag is set, which serves to the next step to remove all the obsolete “sensor” entries.

When the operation on the “tdevices” table is over, begins the one on the “tsensors” and the “thistory” tables.

As in the previous snippet, first there is a selection of the records of “tsensors” marked as owned by the current device identifier. Then, if the “flush” flag is set, all the records are (marked as) deleted.

Finally, the data contained in the incoming message are scanned one item at once. For each variable, it looks for the corresponding entry in the recordset, then either update it or add a new record if wasn’t found.

Any item present in the message is also appended “as-is” to the “thistory” table.

//update/insert the device's data record

function manageSensorTable(flush) {

sensorsTable

.where({

devId: incomingData.devId

}).read({

success: function(results) {

if (flush) {

//flush any existent sensor record related to the involved device

console.log("Flush sensors data");

for (var i = 0; i < results.length; i++) {

sensorsTable.del(results[i].id);

}

}

for (var i = 0; i < incomingSensorArray.length; i++) {

var sensorData = {

devId: incomingData.devId,

name: incomingSensorArray[i].name,

value: incomingSensorArray[i].value

};

//find the index of the related sensor

var index = flush ? 0 : results.length;

while (--index >= 0) {

if (results[index].name == sensorData.name)

break;

}

if (index >= 0) {

//record found, so update some values in it

results[index].devId = sensorData.devId;

results[index].name = sensorData.name;

results[index].value = sensorData.value;

sensorsTable.update(results[index]);

} else {

//Perform the insert in the DB

sensorsTable.insert(sensorData);

}

//insert the record in the historian table

historyTable.insert(sensorData, {

success: function() {

//do nothing

}

});

}

}

});

}

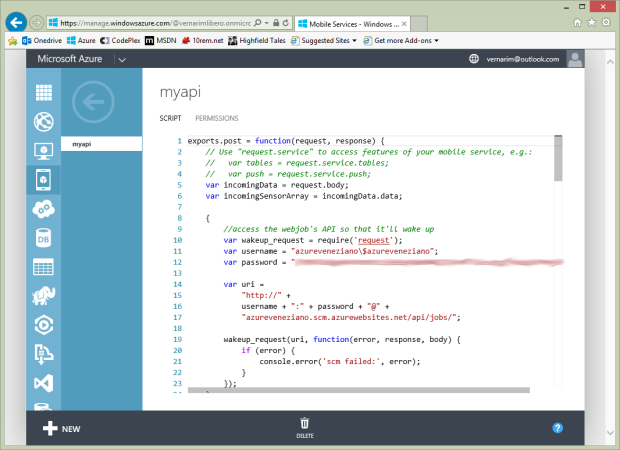

The last but not least piece of script is for waking up the webjob.

Please, note that my usage of the webjob is rather uncommon, but I think it’s the best compromise. The trade is between the Azure “free-context” limitations, and the desired service availability. The result is a webjob configured as “running continuously”, but is shut down by the platform when there’s no external “stimulation”. The trick is to “wake up” the webjob only when necessary by invoking a fake call to its site.

Have a look at my question on StackOverflow on how to solve the problem.

{

//access the webjob's API so that it'll wake up

var wakeup_request = require('request');

var username = "azureveneziano\$azureveneziano";

var password = "(web-site-password)";

var uri =

"http://" +

username + ":" + password + "@" +

"azureveneziano.scm.azurewebsites.net/api/jobs/";

wakeup_request(uri, function(error, response, body) {

if (error) {

console.error("scm failed:", error);

}

});

}

At the end, it’s a trivial dummy read to the webjob deployment site. This read wakes up or keeps awaken the webjob.

Please, notice that all the “console” calls are useful only during the debugging stage: you should remove them when the system is stable enough.

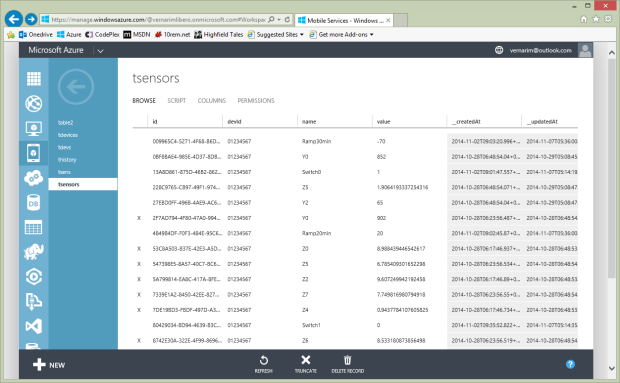

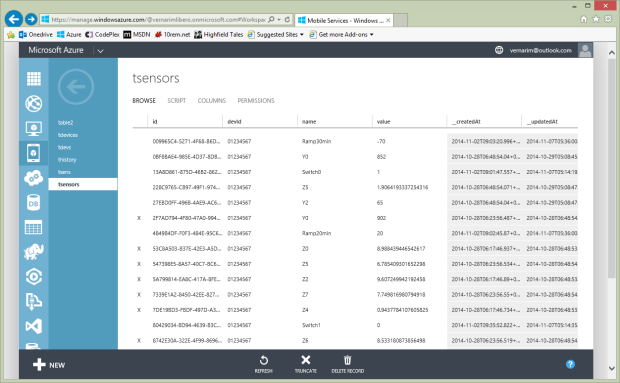

If everything goes well, the Netduino should send some data to the Azure API, and the database should fill.

Here is an example of what the “tsensors” table may contain:

Creating and deploying the webjob.

To understand what a “webjob” is, I suggest to read the Scott Hanselman’s article.

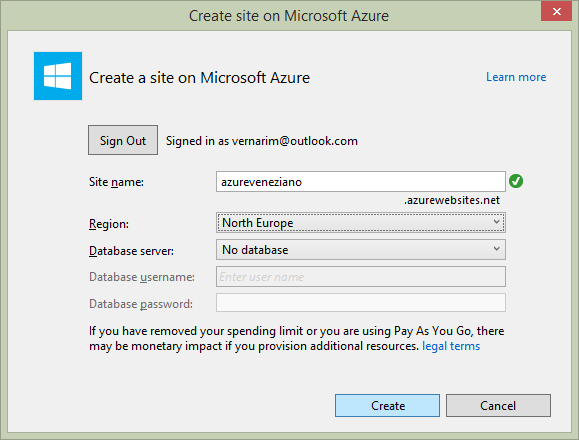

Since a webjob is part of a web-site, you must create one first. Azure offers up to 10 web-sites for free, so that isn’t a problem. At the moment, I don’t use any “real” web-site (meaning pages), but I need the registration.

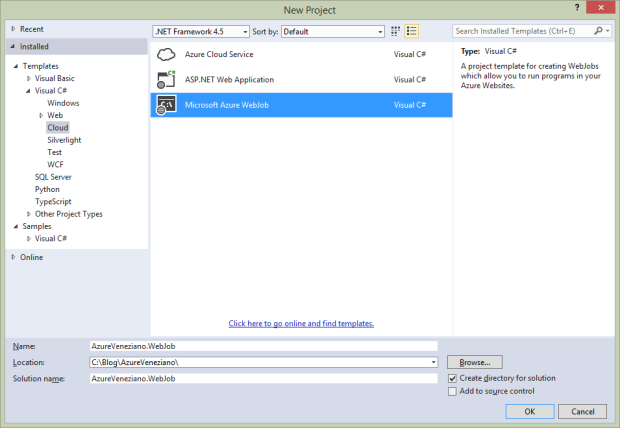

The procedure of registration, deployment and related task can be easily managed from within Visual Studio.

When I started the project I used Visual Studio Express 2013 for Web, and the Update 4 CTP allowed such a management. Since a few days, there’s another great alternative: Visual Studio 2013 Community, which comes out with Update 4 released, but offers also a lot of useful features.

The following snapshots were taken on the Express release, but should be similar on other editions.

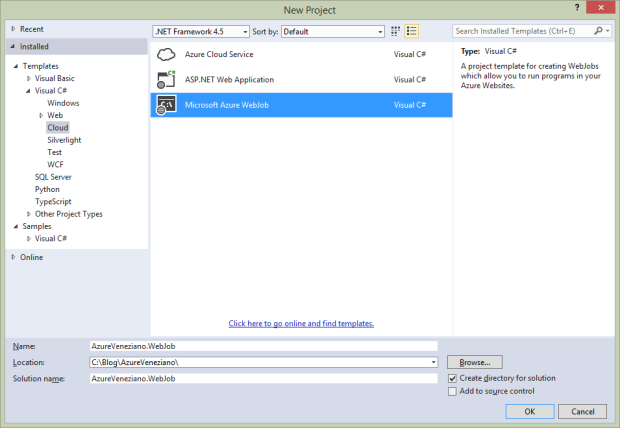

Start Visual Studio and create a “Microsoft Azure Webjob” project, and give it the proper name.

As you may notice, the solution composition looks almost the same as a normal Console application.

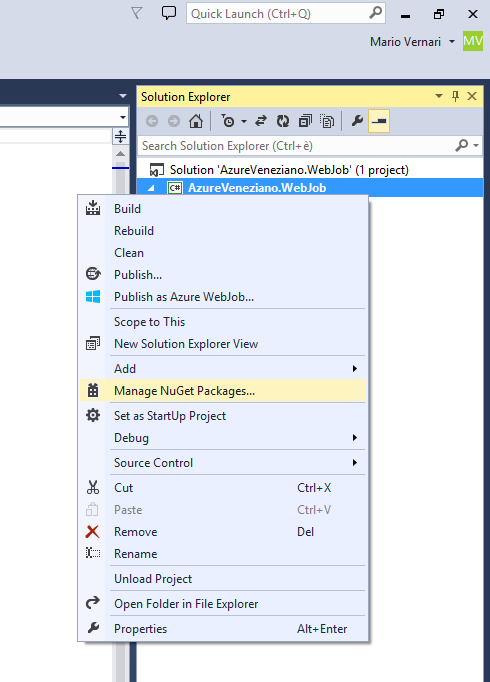

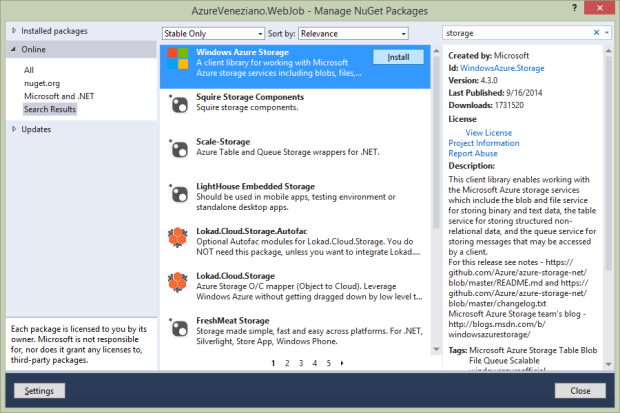

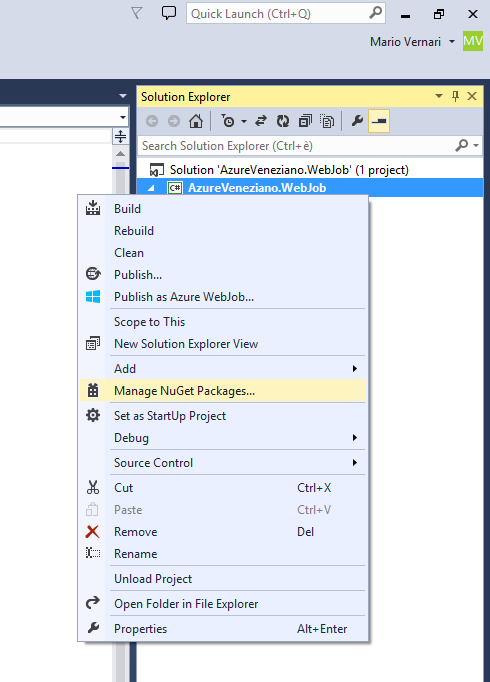

In order to add the proper references, just choose the “Manage NuGet packages” from the project’s contextual menu.

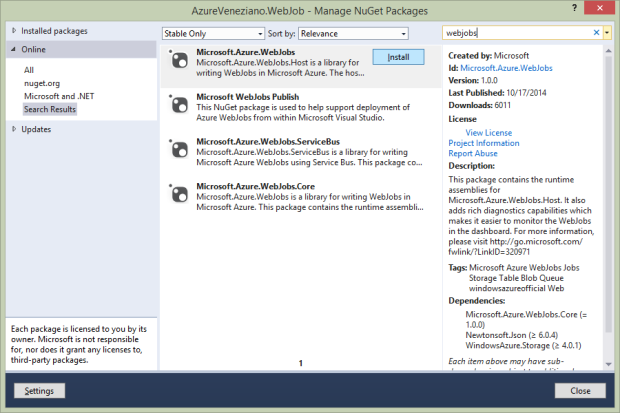

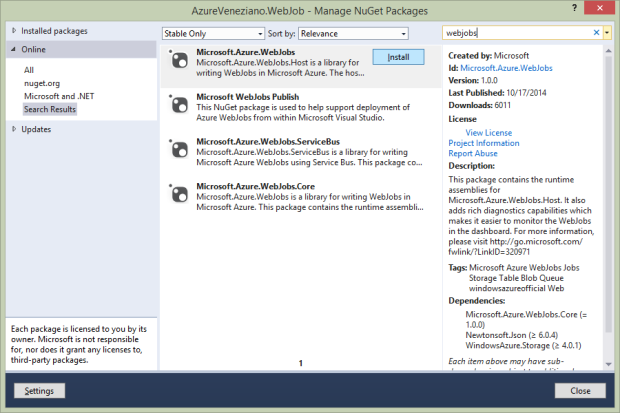

Firstly install the base “Microsoft.Azure.Webjobs” package as follows:

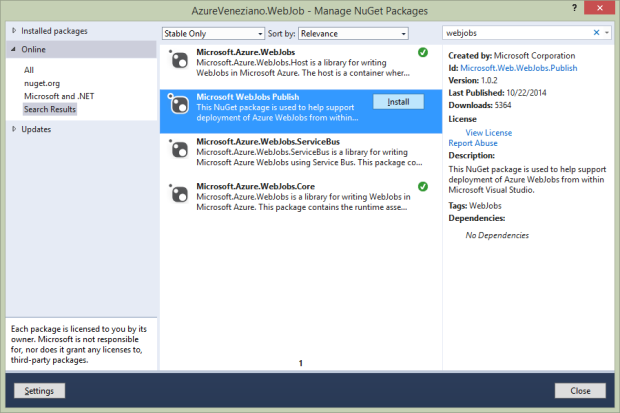

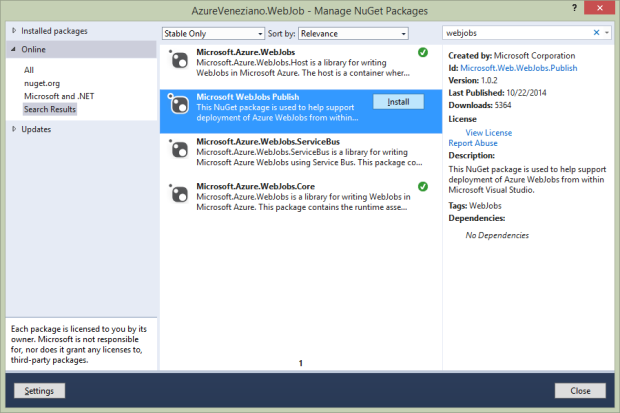

Then install the “Microsoft Webjobs Publish” package:

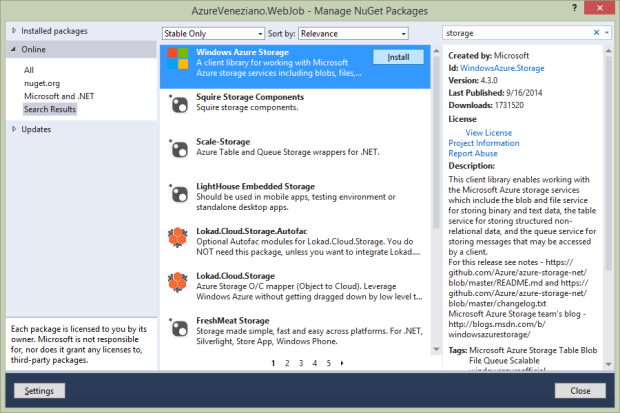

Finally install the “Windows Azure Storage” package:

Since this webjob will “run continuously”, but will be actually shut down often, the very first thing to add to the code is a procedure for detecting the shutting request, so that to exit the application gracefully.

This piece of code isn’t mine, so I invite to read the original article by Amit Apple about the trick.

#region Graceful-shutdown watcher

/**

* Implement the code for a graceful shutdown

* http://blog.amitapple.com/post/2014/05/webjobs-graceful-shutdown/

**/

//get the shutdown file path from the environment

string shutdownFile = Environment.GetEnvironmentVariable("WEBJOBS_SHUTDOWN_FILE");

//set the flag to alert the incoming shutdown

bool isRunning = true;

// Setup a file system watcher on that file's directory to know when the file is created

var fileSystemWatcher = new FileSystemWatcher(

Path.GetDirectoryName(shutdownFile)

);

//define the FileSystemWatcher callback

FileSystemEventHandler fswHandler = (_s, _e) =>

{

if (_e.FullPath.IndexOf(Path.GetFileName(shutdownFile), StringComparison.OrdinalIgnoreCase) >= 0)

{

// Found the file mark this WebJob as finished

isRunning = false;

}

};

fileSystemWatcher.Created += fswHandler;

fileSystemWatcher.Changed += fswHandler;

fileSystemWatcher.NotifyFilter = NotifyFilters.CreationTime | NotifyFilters.FileName | NotifyFilters.LastWrite;

fileSystemWatcher.IncludeSubdirectories = false;

fileSystemWatcher.EnableRaisingEvents = true;

Console.WriteLine("Running and waiting " + DateTime.UtcNow);

#endregion

At this point you might add some blocking code, and test what happens. As in the Amit’s article:

// Run as long as we didn't get a shutdown notification

while (isRunning)

{

// Here is my actual work

Console.WriteLine("Running and waiting " + DateTime.UtcNow);

Thread.Sleep(1000);

}

Console.WriteLine("Stopped " + DateTime.UtcNow);

Before deploying the webjob onto Azure, we should check the “webjob-publish-settings” file which is part of the project. Basically, we should adjust the file in order to instruct the server to run the webjob continuously. Here is an example:

{

"$schema": "http://schemastore.org/schemas/json/webjob-publish-settings.json",

"webJobName": "AzureVenezianoWebJob",

"startTime": null,

"endTime": null,

"jobRecurrenceFrequency": null,

"interval": null,

"runMode": "Continuous"

}

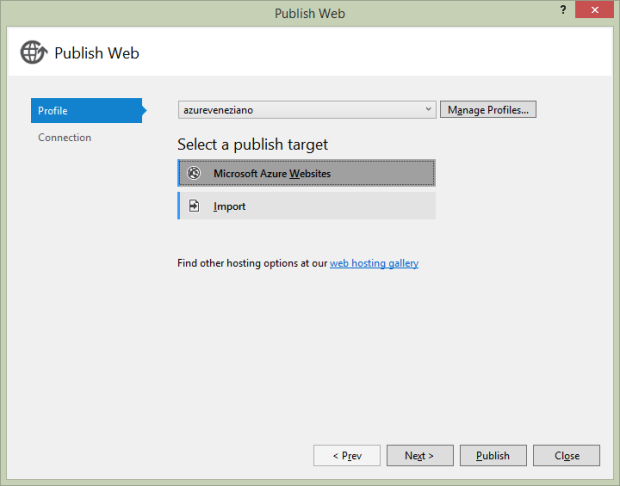

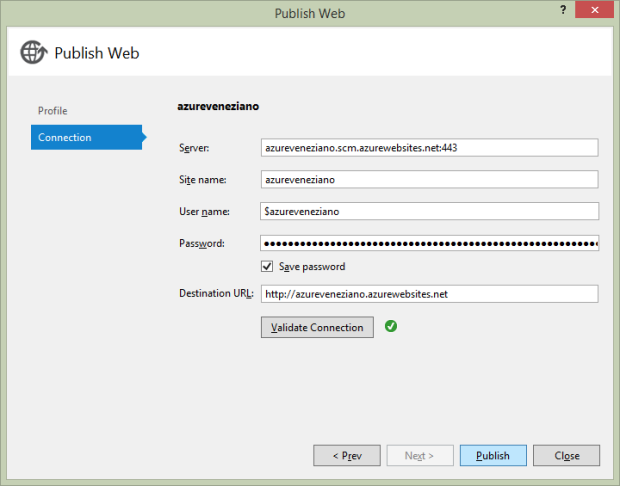

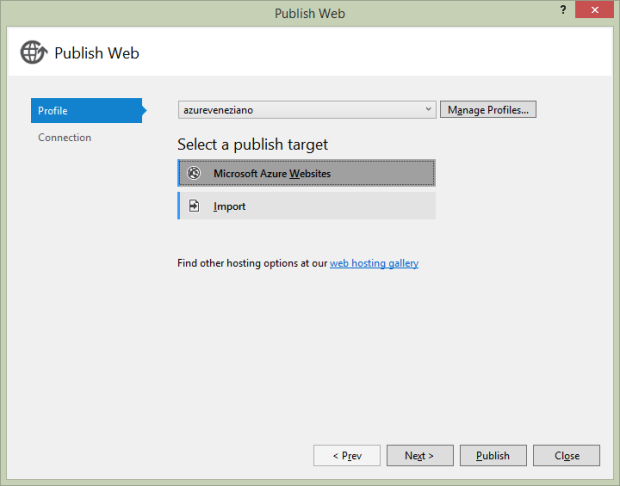

Open the project’s contextual menu, and choose the “Publish as Azure Webjob” item. A wizard like this one will open:

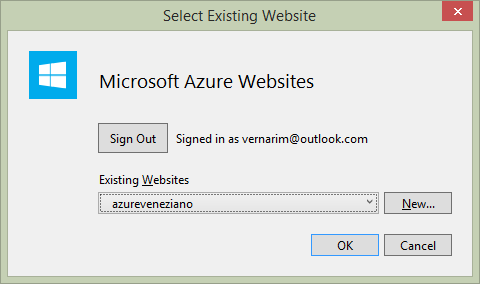

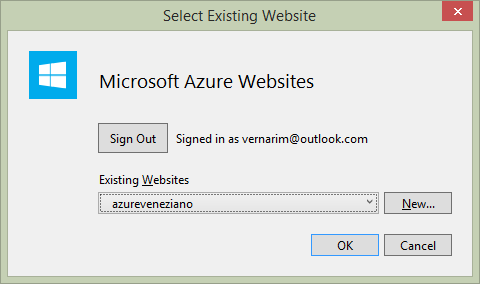

We should specify the target web-site from this dialog:

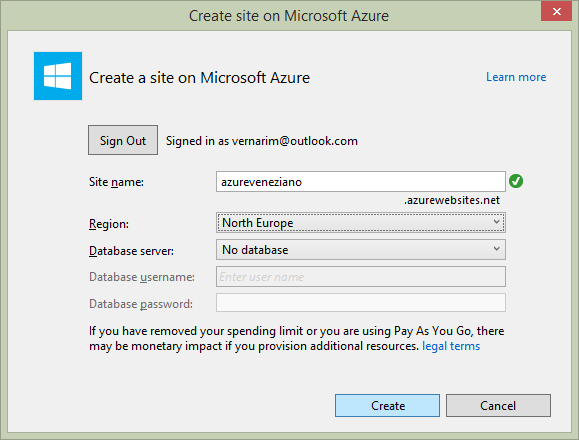

If the web-site is not existent yet, we should create a new one:

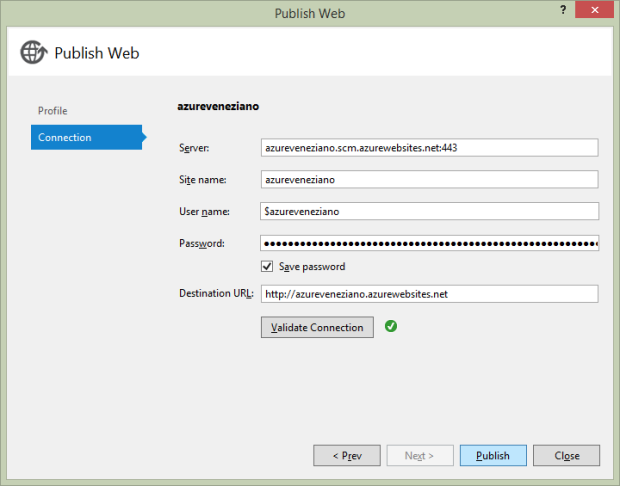

When everything has been collected for the deployment, we can validate the connection, then proceed to the publication.

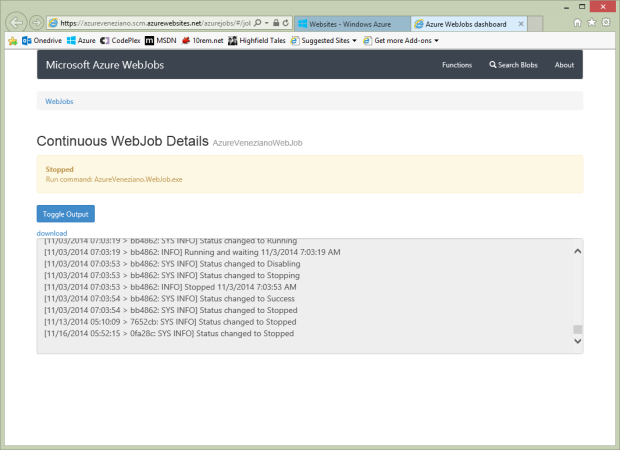

Once the webjob has been published, it should placed to run immediately. To test whether the shut down will happen gracefully, simply leave the system as is, and go to take a cup of coffee. After 20-30 minutes, you can check what really happened in the webjob’s log.

Please, note that it’s important that you leave any webjobs’ status page of the Azure portal during the test. It would hold alive the service without really shutting it down.

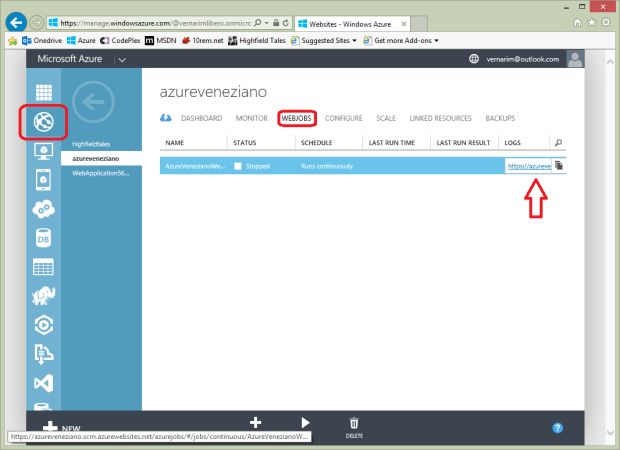

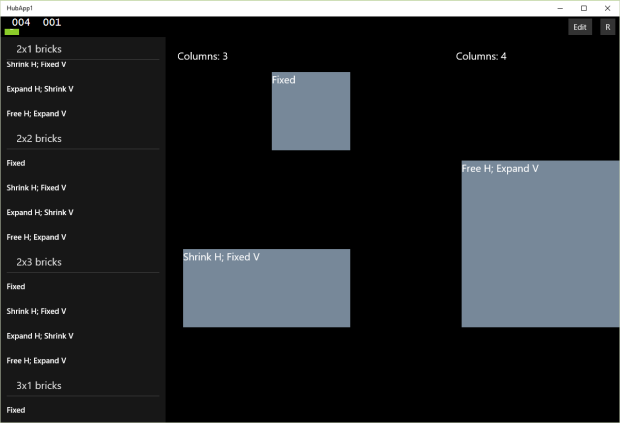

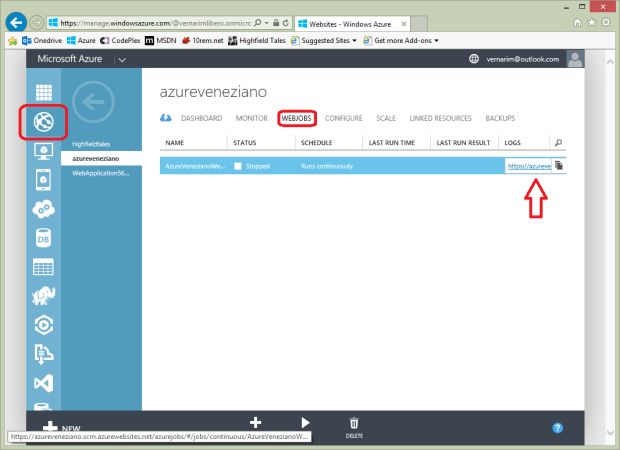

Enter in the “websites” category, then in the “Webjobs” section:

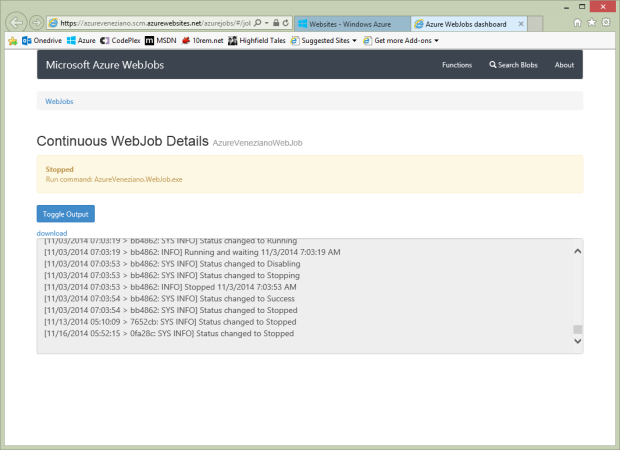

At this point you should see the status as “running” or being changing to. Click the link below the “LOGS” column, and a special page will open:

This mini-portal is a really nice diagnostic tool for the webjobs. You should able to trace both explicit “Console” logs and also exceptions. To reveal the proper flow of the webjob, you should check the timestamps, as well as the messages such as:

[11/03/2014 07:03:53 > bb4862: INFO] Stopped 11/3/2014 7:03:53 AM

The mail alert application.

Most of the material inherent to this article has been shown. However, I just would to close this part with a “concrete” sign of what the project should do. On the next article I’ll focus almost entirely on the webjob code, where the system could considered finished (many things will follow, though).

As described above, as soon a message from any device calls the API, the webjob is waken up (in case is stopped), and the data are pushed in the database.

The webjob task should pick those data out, and detect what is changed. However, the API and the webjob execution are almost asynchronous each other, so it’s better to leave the webjob running and polling for other “news”. On the other hands, when something changes by a remote point, it might be possible that something else will change too in a short time. This is another reason for leaving the webjob running until the platform shuts it down.

I don’t want to dig into details here: this will be argument for the next article. The only important thing is how the data are read periodically (about 10 seconds here) from the server. The data read are copied in a local in-memory model, for ease of interaction with the language.

At the end of each poll, the variable which are changed since the previous poll are marked with the corresponding flag. Immediately after, the program flow yields the execution of a custom logic, that is what the system should do upon a certain status.

private const string connectionString =

"Server=tcp:(your-sqlserver-name).database.windows.net,1433;" +

"Database=highfieldtales;" +

"User ID=(your-sqlserver-username);" +

"Password=(your-sqlserver-password);" +

"Trusted_Connection=False;" +

"Encrypt=True;" +

"Connection Timeout=30;";

static void Main()

{

// ...

//create and open the connection in a using block. This

//ensures that all resources will be closed and disposed

//when the code exits.

using (var connection = new SqlConnection(connectionString))

{

//create the Command object

var command = new SqlCommand(

"SELECT * FROM highfieldtales.tsensors WHERE __deleted = 0",

connection

);

//open the connection in a try/catch block.

//create and execute the DataReader, writing the result

//set to the console window.

try

{

connection.Open();

//run as long as we didn't get a shutdown notification

int jobTimer = 0;

while (isRunning)

{

if (++jobTimer > 10)

{

jobTimer = 0;

//extract all the variables from the DB table

using (SqlDataReader reader = command.ExecuteReader())

{

while (reader.Read())

{

/**

* update the local in-memory model with the

* data read from the SQL database

*/

}

}

//detect the most recent update timestamp as the new reference

foreach (LogicVar lvar in MachineStatus.Instance.Variables.Values)

{

if (lvar.LastUpdate > machine.LastUpdate)

{

machine.LastUpdate = lvar.LastUpdate;

}

}

//invoke the custom logic

logic.Run();

}

Thread.Sleep(1000);

}

}

catch (Exception ex)

{

Console.WriteLine(ex.Message);

}

}

// ...

}

Let’s say that this piece of code is “fixed”. Regardless what the system should react upon the status, this section will be always the same. For this reason there’s a special, well-defined area where we could write our own business logic.

Here is a very simple example:

class CustomLogic

: ICustomLogic

{

public void Run()

{

LogicVar analog0 = MachineStatus.Instance.Variables["Analog0"];

LogicVar analog1 = MachineStatus.Instance.Variables["Analog1"];

if ((analog0.IsChanged || analog1.IsChanged) &&

(double)analog0.Value > (double)analog1.Value

)

{

var mail = new MailMessage();

mail.To.Add("vernarim@outlook.com");

mail.Body = "The value of Analog0 is greater than Analog1.";

MachineStatus.Instance.SendMail(mail);

}

}

}

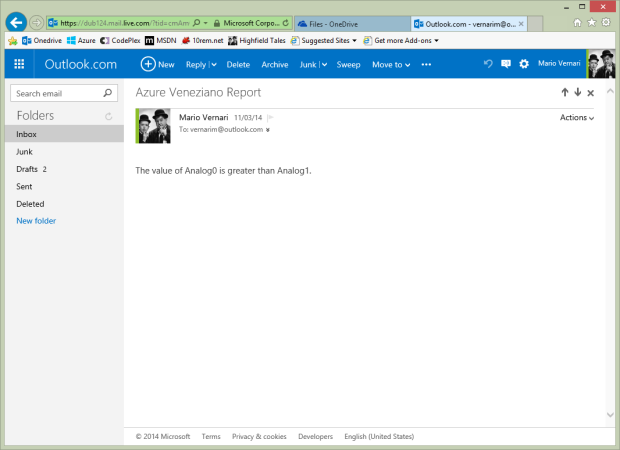

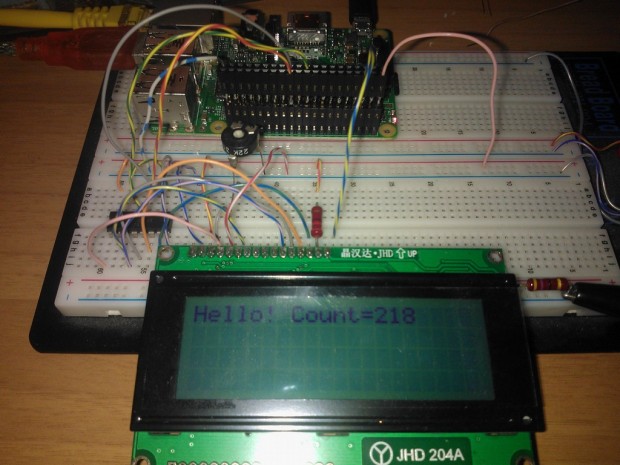

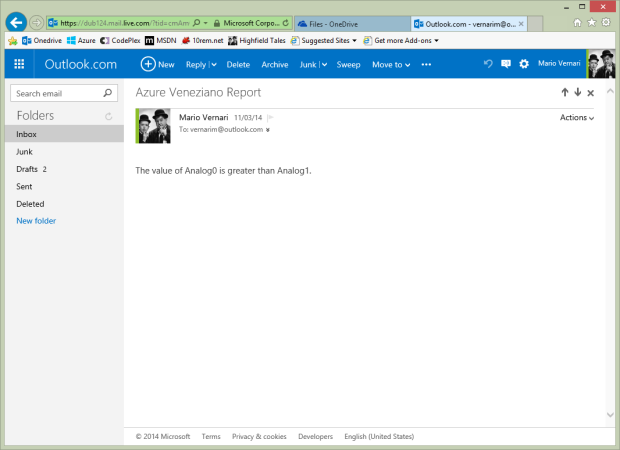

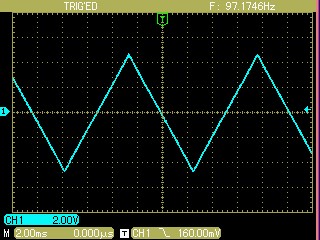

If you remember, the “Analog0” and “Analog1” are two variables sent by the Netduino. When I turn the trimpots so that:

- any of the two variables is detected as changed, and…

- the “Analog0” value becomes greater than the “Analog1” value…

…then an e-mail message is created and sent to me…(!)

Here is what I see on my mailbox:

Conclusions.

This article looks long, but it isn’t actually so: there are a lot of picture because the Azure setup walkthrough.

Azure experts may say that a more straightforward solution would be using a Message-Hub instead of a tricky way to trigger a webjob. Well, yes and no. I didn’t find a way to “peek” what’s inside a queue without removing its content, as long as other problems to solve.

This is much more an experimental project built on the Azure “sandbox”, than a definitive optimal way to structure a telemetry system. However, I believe that’s a very good point to start, take practice, then refine your own project.

In the next article, I’ll show how to create a better (yet useful) mail alerting component.

If you don’t have problems, it means that you are doing nothing new. In my job, I do have problems almost everyday, and that’s making me happy!

If you don’t have problems, it means that you are doing nothing new. In my job, I do have problems almost everyday, and that’s making me happy!