This is the first part of a series in which I’ll present a telemetry project as a classic “Internet of Things” (IoT) showcase. The project starts out very basic, but it’ll grow in the next parts as several useful components are added.

Microsoft Azure plays the central role, but other sections will range over several technologies.

The source of the project is hosted in the azure-veneziano GitHub repository.

Inspiration.

This project was born as a sandbox for digging into cloud technologies that may apply to our control systems. I wanted to walk through almost every corner of a real control system (kind of a SCADA, if you like), to understand the benefits and limitations of a fully centralized solution.

By the way, I was also inspired by my friend Laurent Ellerbach, who published a very well-written article on how to create your own garden sprinkler system. Overall, I loved the mixture of different components which can be “glued” (a.k.a. interconnected) together: it seems that we’ve reached a milestone, where the flexibility offered by those technologies is greater than our imagination.

At the time of writing, Laurent is translating his article from French to English, so I’m waiting for the new link. In the meantime, here’s an equivalent presentation that he gave in Kiev, Ukraine, not long ago.

UPDATE: Laurent’s article is now available here.

Why the name “Azure Veneziano”?

If you’ve had the chance to visit my city, you’ve probably also seen some of the famous glass-makers of Murano in action. “Blu Veneziano” is a particular tone of blue, which is often used for the glass.

I just wanted to honor Venezia, but also mention the “color” of the framework used, hence the name!

The system structure.

The system is structured as a producer-consumer, where:

- the data producer is one (or more) “mobile devices”, which sample and sometimes collect data from sensors;

- the data broker, storage and business layer are deployed on Azure, where the main logic works;

- the data consumers are both the logic and the final user (myself in this case), who monitor the system.

In this introductory article I’ll focus on the first section, using a single Netduino Plus 2 board as the data producer.

Netduino as the data producer.

From the IoT perspective, the Netduino plays the “mobile device” role. Basically, it acts as a thin hardware-software interface, so that the converted data can be sent to a server (Azure, in this case). Just think of a temperature sensor wired to an ADC, with some logic that gets the numeric value and sends it to Azure. However, here I won’t detail a “real-sensor” system, but rather a small simulation that anyone can set up in minutes.

Moreover, since I introduced the project as “telemetry”, the data flow is only outgoing from the Netduino. It means that there’s (still) no support for sending “commands” to the board. Let’s stick to the simplest possible implementation.

The hardware.

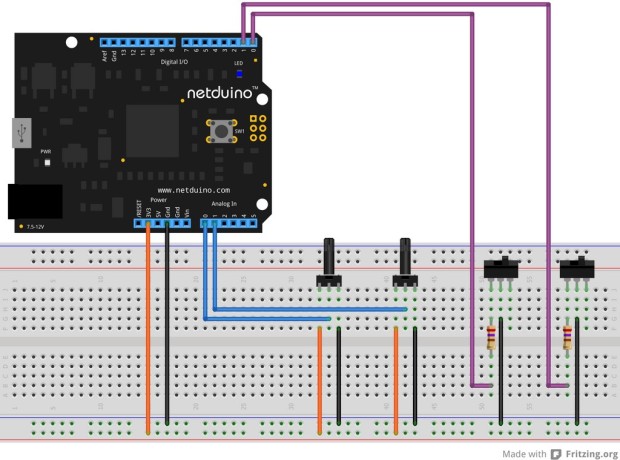

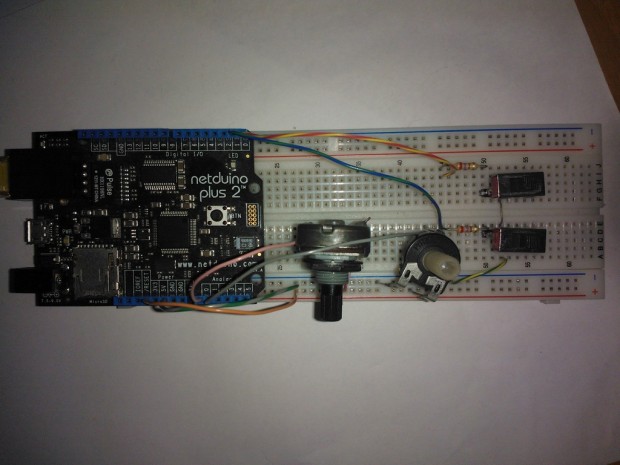

The circuit is very easy.

Two trimpots: each one provides a voltage swinging from 0.0 to 3.3 V to its respective analog input. The Netduino’s internal ADC converts that voltage to a floating-point (Double) value, which ranges from 0.0 to 100.0 (for the sake of readability, treating it as a percentage).
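As a rough illustration of that conversion, here is a minimal sketch of how a raw ADC count could map onto the 0.0 to 100.0 range. The `AdcScaling` class and the `ToScaled` method are hypothetical names, and both the 12-bit resolution and the formula (raw count divided by the full-scale count, times the scale, plus the offset) are assumptions, not the framework's documented behavior. The analog values in the sample messages later in this article are at least consistent with it.

```csharp
using System;

public static class AdcScaling
{
    // Assumption: 12-bit converter, so raw counts span 0..4095.
    public const int FullScaleCount = 4095;

    // Map a raw ADC count to engineering units:
    // raw / full-scale * scale + offset.
    public static double ToScaled(int rawCount, double scale, double offset)
    {
        if (rawCount < 0 || rawCount > FullScaleCount)
        {
            throw new ArgumentOutOfRangeException("rawCount");
        }
        return (double)rawCount / FullScaleCount * scale + offset;
    }
}
```

With a scale of 100.0 and an offset of 0.0, a trimpot at mid-travel (a raw count around 2048) would read roughly 50, i.e. "50 percent".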

There are also two toggle switches. Each one is connected to a discrete (Boolean) input, which should be configured with an internal pull-up. When the switch is open, the pull-up resistor takes the input to the “high” level (true). When the switch is closed to ground, it takes the input to the “low” level, since its resistance is lower than the pull-up’s.

As you may notice, there’s a low-value resistor in series with each switch: I used 270 Ohms, but the value is not critical at all. The purpose is just to protect the Netduino input from mistakes. Just imagine the pin wrongly configured as an output: what if the output drove the high level while the switch is closed to ground? The output probably won’t fry, but the stress on that port isn’t a good thing.

All those “virtual” sensors can be seen from a programmer’s perspective as two Double and two Boolean values. The funny thing is that I can modify their values with my fingers!

Again, it doesn’t matter here what the real sensor could be. I’d like to keep the hardware section light for those who don’t like (or don’t know much about) electronics. There are many ready-to-use modules/shields to connect, which avoid (or minimize) the need to deal with the hardware.

Some virtual ports and my…laziness.

Believe me, I’m lazy.

Although I’m having a lot of fun playing with those hardware/software things, I really don’t like to keep spinning the trimpots or flipping the switches all the time, yet I need some data changing over time. So, I created a kind of (software) virtual port.

This port will be detailed below, and its task is to mimic a “real” hardware port. From the data production perspective it’s no different from the real ports, but way easier to manage, especially in a testing/demo session.

This concept of the “virtual port” is very common even in high-end systems. Just think of a diagnostic section of a device, which collects data from non-physical sources (e.g. memory usage, CPU usage, etc.).

The software.

Since the goal is to post the data read by the Netduino to a server, we should carefully choose the best way to do it.

The simplest way to connect a Netduino Plus 2 to the rest of the world is with an Ethernet cable. That’s fine, at least for the prototype, because the goal is to reach the Internet.

As for the protocol, among the several available for exchanging data with Azure, I think the simplest yet well-known approach is HTTP. Also bear in mind that the current Netduino/.NET Micro Framework implementation doesn’t offer any “special” protocol.

The software running on the board is very simple. It can be structured as follows:

- the main application, as the primary logic of the device;

- some hardware port wrappers as data-capturing helpers;

- an HTTP client optimized for Azure-mobile data exchange;

- a JSON DOM with serialization/deserialization capabilities.

The data transfer is plain HTTP. At the time of writing, the .NET Micro Framework did not yet offer any HTTPS support, so the data flow unsecured.

The first part of the main application is about the port definitions. It’s not particularly different from the classic declaration, but the ports are “wrapped” with a custom piece of code.

    /**
    * Hardware input ports definition
    **/
    private static InputPortWrapper _switch0 = new InputPortWrapper(
        "Switch0",
        Pins.GPIO_PIN_D0
        );

    private static InputPortWrapper _switch1 = new InputPortWrapper(
        "Switch1",
        Pins.GPIO_PIN_D1
        );

    private static AnalogInputWrapper _analog0 = new AnalogInputWrapper(
        "Analog0",
        AnalogChannels.ANALOG_PIN_A0,
        100.0,
        0.0
        );

    private static AnalogInputWrapper _analog1 = new AnalogInputWrapper(
        "Analog1",
        AnalogChannels.ANALOG_PIN_A1,
        100.0,
        0.0
        );

The port wrappers.

The port wrappers have a twofold aim:

- yield a better abstraction over a generic input port;

- manage the “has-changed” flag, especially for non-discrete values such as the analogs.

Let’s have a peek at the AnalogInputWrapper class, for instance:

    /// <summary>
    /// Wrapper around the standard <see cref="Microsoft.SPOT.Hardware.AnalogInput"/>
    /// </summary>
    public class AnalogInputWrapper
        : AnalogInput, IInputDouble
    {
        public AnalogInputWrapper(
            string name,
            Cpu.AnalogChannel channel,
            double scale,
            double offset,
            double normalizedTolerance = 0.05
            )
            : base(channel, scale, offset, 12)
        {
            this.Name = name;

            //precalculate the absolute variation window
            //around the reference (old) sampled value
            this._absoluteToleranceDelta = scale * normalizedTolerance;
        }

        private double _oldValue = double.NegativeInfinity; //undefined
        private double _absoluteToleranceDelta;

        public string Name { get; private set; }
        public double Value { get; private set; }
        public bool HasChanged { get; private set; }

        public bool Sample()
        {
            this.Value = this.Read();

            //detect the variation
            bool hasChanged =
                this.Value < (this._oldValue - this._absoluteToleranceDelta) ||
                this.Value > (this._oldValue + this._absoluteToleranceDelta);

            if (hasChanged)
            {
                //update the reference (old) value
                this._oldValue = this.Value;
            }

            return (this.HasChanged = hasChanged);
        }

        // ...
    }

The class derives from the original AnalogInput port, but exposes the “Sample” method to capture the ADC value (via the Read method). The purpose is similar to a classic sample-and-hold structure, but with a comparison algorithm which detects the variation of the new value.

Basically, a (normalized) “tolerance” parameter has to be defined for the port (the default is 5%). When a new sample is taken, its value is compared against the “old value” and the tolerance window around it. When the new value falls outside the window, the official port value is marked as “changed”, and the old value is replaced with the new one.

This trick is very useful, because it avoids useless (and false) changes of the value. Even a little noise on the power rail can produce a small instability in the nominal sampled ADC value. However, we only need “concrete” variations.

The above class implements the IInputDouble interface as well. This interface in turn derives from another, more abstract interface.
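To see the tolerance window at work without any hardware, here is a minimal stand-alone sketch of the same comparison. The `DeadbandDetector` class and its `Update` method are hypothetical names introduced only for this illustration; the logic mirrors the Sample method above, with the ADC reading replaced by a plain argument.

```csharp
using System;

public class DeadbandDetector
{
    // Absolute half-width of the window around the reference value.
    private readonly double _delta;

    // No reference yet: the first sample always falls outside the window.
    private double _reference = double.NegativeInfinity;

    public DeadbandDetector(double scale, double normalizedTolerance)
    {
        _delta = scale * normalizedTolerance;
    }

    // Returns true when the sample leaves the window around the reference,
    // in which case the sample becomes the new reference.
    public bool Update(double sample)
    {
        bool changed =
            sample < _reference - _delta ||
            sample > _reference + _delta;

        if (changed)
        {
            _reference = sample;
        }
        return changed;
    }
}
```

With a scale of 100 and the default 5% tolerance, the window is 5 units wide on each side: feeding 50.0, 52.0, 54.9 and 55.1 in sequence reports a change only for the first and the last value, because 52.0 and 54.9 stay within 45.0 to 55.0.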

    /// <summary>
    /// Double-valued input port specialization
    /// </summary>
    public interface IInputDouble
        : IInput
    {
        /// <summary>
        /// The sampled input port value
        /// </summary>
        double Value { get; }
    }

    /// <summary>
    /// Generic input port abstraction
    /// </summary>
    public interface IInput
    {
        /// <summary>
        /// Friendly name of the port
        /// </summary>
        string Name { get; }

        /// <summary>
        /// Indicate whether the port value has changed
        /// </summary>
        bool HasChanged { get; }

        /// <summary>
        /// Perform the port sampling
        /// </summary>
        /// <returns></returns>
        bool Sample();

        /// <summary>
        /// Append to the container an object made up
        /// with the input port status
        /// </summary>
        /// <param name="container"></param>
        void Serialize(JArray container);
    }

Those interfaces yield a better abstraction over the different kinds of port: AnalogInput, InputPort and RampGenerator.
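The Boolean wrapper is not listed in this article, so the following is only a guess at how such a port could fit the same abstraction. Everything here is hypothetical: `ISampledInput` is a reduced stand-in for IInput (Serialize is left out), `BooleanInputSketch` reads its level from a delegate instead of a pin, and `PortScanner` shows the uniform sampling over an array. The sketch targets desktop .NET rather than the Micro Framework.

```csharp
using System;

// Reduced stand-in for the article's IInput (Serialize omitted for brevity).
public interface ISampledInput
{
    string Name { get; }
    bool HasChanged { get; }
    bool Sample();
}

// Hypothetical Boolean port: the physical pin is replaced by a delegate,
// so the sketch can run without any hardware.
public class BooleanInputSketch : ISampledInput
{
    private readonly Func<bool> _read;
    private bool _hasReference; // false until the first sample is taken

    public BooleanInputSketch(string name, Func<bool> read)
    {
        Name = name;
        _read = read;
    }

    public string Name { get; private set; }
    public bool Value { get; private set; }
    public bool HasChanged { get; private set; }

    public bool Sample()
    {
        bool current = _read();

        // The first sample always counts as a change; later ones only on a toggle.
        HasChanged = !_hasReference || current != Value;
        Value = current;
        _hasReference = true;
        return HasChanged;
    }
}

public static class PortScanner
{
    // Sample every port through the common interface and count the changes.
    public static int SampleAll(ISampledInput[] ports)
    {
        int changed = 0;
        for (int i = 0; i < ports.Length; i++)
        {
            if (ports[i].Sample())
            {
                changed++;
            }
        }
        return changed;
    }
}
```

The point of the design is that `SampleAll` never needs to know which kind of port it is handling: a discrete input needs no tolerance window, yet it exposes the same has-changed contract as the analog wrapper.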

The RampGenerator as virtual port.

As mentioned earlier, there’s a “false wrapper”: it does NOT wrap any port, but it WORKS as if it were a standard port. The benefit comes from the interface abstraction.

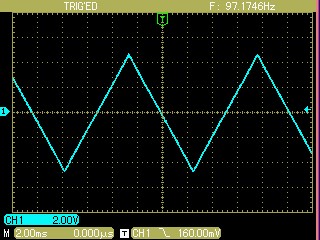

In order to PRODUCE data over time for the demo, I wanted something automatic but also “well-known”. I could have used a random-number generator, but…how do you detect an error or a wrong sequence in a random stream of numbers? Better to rely on a perfectly shaped periodic wave, so I can easily check the correct order of the samples on the server, as well as any missing or duplicated datum.

As a periodic signal you can choose whatever you want. A sine is maybe the most famous periodic wave, but the goal is testing the system, not having something nice to look at. A simple “triangle-wave” generator is just a linear ramp, rising then falling, indefinitely.

    /// <summary>
    /// Virtual input port simulating a triangle waveform
    /// </summary>
    public class RampGenerator
        : IInputInt32
    {
        public RampGenerator(
            string name,
            int period,
            int scale,
            int offset
            )
        {
            this.Name = name;
            this.Period = period;
            this.Scale = scale;
            this.Offset = offset;

            //the wave being subdivided in 40 slices
            this._stepPeriod = this.Period / 40;

            //vertical direction: 1=rise; -1=fall
            this._rawDirection = 1;
        }

        // ...

        public bool Sample()
        {
            bool hasChanged = false;

            if (++this._stepTimer <= 0)
            {
                //very first sampling
                this.Value = this.Offset;
                hasChanged = true;
            }
            else if (this._stepTimer >= this._stepPeriod)
            {
                if (this._rawValue >= 10)
                {
                    //hit the upper edge, then begin to fall
                    this._rawValue = 10;
                    this._rawDirection = -1;
                }
                else if (this._rawValue <= -10)
                {
                    //hit the lower edge, then begin to rise
                    this._rawValue = -10;
                    this._rawDirection = 1;
                }

                this._rawValue += this._rawDirection;
                this.Value = this.Offset + (int)(this.Scale * (this._rawValue / 10.0));
                hasChanged = true;
                this._stepTimer = 0;
            }

            return (this.HasChanged = hasChanged);
        }

        // ...
    }

Here is how a triangle wave looks on a scope (it’s 100 Hz, just to give an idea).

Of course, I could have used a normal bench wave generator as a physical signal source, as in the snapshot right above. That would have been more realistic, but the wave period would have been too short (i.e. too fast) and the “changes”, with the consequent message uploads, too frequent. A software-based signal generator is well suited to very long periods, such as many minutes.
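To check what sequence the server should expect, the stepping logic of the generator can be replayed on its own. This is a simplified sketch, not the RampGenerator itself: the `TriangleWave` class is a hypothetical name, the step timer is left out (each call to `Next` corresponds to one slice), and the scaling uses integer arithmetic, which matches the original formula whenever the floating-point product is exact.

```csharp
using System;

public class TriangleWave
{
    private readonly int _scale;
    private readonly int _offset;
    private int _raw;           // raw level, bounded to -10..+10
    private int _direction = 1; // 1 = rising, -1 = falling

    public TriangleWave(int scale, int offset)
    {
        _scale = scale;
        _offset = offset;
    }

    // Advance the wave by one slice and return the scaled value.
    public int Next()
    {
        if (_raw >= 10)
        {
            // Upper edge reached: start falling.
            _raw = 10;
            _direction = -1;
        }
        else if (_raw <= -10)
        {
            // Lower edge reached: start rising.
            _raw = -10;
            _direction = 1;
        }

        _raw += _direction;
        return _offset + (_scale * _raw) / 10;
    }

    // Convenience helper: collect the next 'count' slices of a fresh wave.
    public static int[] Take(int scale, int offset, int count)
    {
        var wave = new TriangleWave(scale, offset);
        var values = new int[count];
        for (int i = 0; i < count; i++)
        {
            values[i] = wave.Next();
        }
        return values;
    }
}
```

With a scale of 100 and an offset of 0, the values climb in steps of 10 up to 100, fall to -100, and return to 0 after exactly 40 slices, which is where the division by 40 in the constructor comes from. For a 1200-second period sampled about once per second, each slice lasts roughly 30 samples.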

The HTTP client.

As described above, the data are sent to the server via plain (unsecured) HTTP. The Netduino Plus 2 does not offer any ready-made HTTP client, but it does offer some primitives which help you create your own.

Without digging too deep, the client is rather simple. If you know how a basic HTTP transaction works, then you’ll have no difficulty understanding what the code does.

    /// <summary>
    /// HTTP Azure-mobile service client
    /// </summary>
    public class MobileServiceClient
    {
        public const string Read = "GET";
        public const string Create = "POST";
        public const string Update = "PATCH";

        // ...

        /// <summary>
        /// Create a new client for HTTP Azure-mobile servicing
        /// </summary>
        /// <param name="serviceName">The name of the target service</param>
        /// <param name="applicationId">The application ID</param>
        /// <param name="masterKey">The access secret-key</param>
        public MobileServiceClient(
            string serviceName,
            string applicationId,
            string masterKey
            )
        {
            this.ServiceName = serviceName;
            this.ApplicationId = applicationId;
            this.MasterKey = masterKey;

            this._baseUri = "http://" + this.ServiceName + ".azure-mobile.net/";
        }

        // ..

        private JToken OperateCore(
            Uri uri,
            string method,
            JToken data
            )
        {
            //create a HTTP request
            using (var request = (HttpWebRequest)WebRequest.Create(uri))
            {
                //set-up headers
                var headers = new WebHeaderCollection();
                headers.Add("X-ZUMO-APPLICATION", this.ApplicationId);
                headers.Add("X-ZUMO-MASTER", this.MasterKey);

                request.Method = method;
                request.Headers = headers;
                request.Accept = JsonMimeType;

                if (data != null)
                {
                    //serialize the data to upload
                    string serialization = JsonHelpers.Serialize(data);
                    byte[] byteData = Encoding.UTF8.GetBytes(serialization);
                    request.ContentLength = byteData.Length;
                    request.ContentType = JsonMimeType;
                    request.UserAgent = "Micro Framework";
                    //Debug.Print(serialization);

                    using (Stream postStream = request.GetRequestStream())
                    {
                        postStream.Write(
                            byteData,
                            0,
                            byteData.Length
                            );
                    }
                }

                //wait for the response
                using (var response = (HttpWebResponse)request.GetResponse())
                using (var stream = response.GetResponseStream())
                using (var reader = new StreamReader(stream))
                {
                    //deserialize the received data
                    return JsonHelpers.Parse(
                        reader.ReadToEnd()
                        );
                }
            }
        }
    }

The above code is derived from an old project, although only a few lines of that release actually survive here. However, I want to mention the source for those who are interested.

Azure Mobile Services offers two kinds of APIs which can be called: table (database) operations and custom-API operations. Again, I’ll detail those features in the next article.

The key role belongs to the OperateCore method, which is a private entry point for both the table and the custom-API requests. All Azure needs is some special HTTP headers, which should contain the identification keys for gaining access to the platform.

The request’s content is just a JSON document, that is, simple plain text.
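To make the “plain text” nature of the exchange tangible, the sketch below assembles an approximation of what travels over the wire for one upload. It is only an illustration under several assumptions: `RequestPreview` and `Build` are hypothetical names, the `application/json` MIME type is a guess at the value of JsonMimeType (not shown in the listing), the `/api/myapi` path is assumed for a custom API, and the real HttpWebRequest may add or reorder headers.

```csharp
using System;
using System.Text;

public static class RequestPreview
{
    // Assemble an approximate HTTP/1.1 request as text.
    // The header names X-ZUMO-APPLICATION and X-ZUMO-MASTER come from the listing above;
    // everything else is an assumption made for illustration.
    public static string Build(
        string host,
        string path,
        string method,
        string applicationId,
        string masterKey,
        string json)
    {
        // Content-Length counts bytes, not characters.
        int length = Encoding.UTF8.GetByteCount(json);

        var sb = new StringBuilder();
        sb.Append(method + " " + path + " HTTP/1.1\r\n");
        sb.Append("Host: " + host + "\r\n");
        sb.Append("X-ZUMO-APPLICATION: " + applicationId + "\r\n");
        sb.Append("X-ZUMO-MASTER: " + masterKey + "\r\n");
        sb.Append("Accept: application/json\r\n");
        sb.Append("Content-Type: application/json\r\n");
        sb.Append("Content-Length: " + length + "\r\n");
        sb.Append("\r\n"); // blank line separating headers from body
        sb.Append(json);
        return sb.ToString();
    }
}
```

Calling `Build("myservice.azure-mobile.net", "/api/myapi", "POST", "(app-id)", "(key)", "{}")` yields a handful of header lines, an empty line, and the JSON body. Since the keys travel in clear text over unsecured HTTP, this also shows why the lack of HTTPS matters.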

The main application.

When the program starts, it first creates an instance of the Azure Mobile HTTP client (Zumo), then wraps all the port references within an array, for ease of management.

Notice that there are also two “special” ports of type “RampGenerator”. In this demo there are two wave generators with periods of 1200 and 1800 seconds, respectively. Their ranges are also slightly different, just to make the data verification less confusing.

The ability to fit all the ports in a single array, then treat them as if they were a single entity, is the benefit offered by the interface abstraction.

    public static void Main()
    {
        //instantiate a new Azure-mobile service client
        var ms = new MobileServiceClient(
            "(your service name)",
            applicationId: "(your application-id)",
            masterKey: "(your master key)"
            );

        //collect all the input ports as an array
        var inputPorts = new IInput[]
        {
            _switch0,
            _switch1,
            new RampGenerator("Ramp20min", 1200, 100, 0),
            new RampGenerator("Ramp30min", 1800, 150, 50),
            _analog0,
            _analog1,
        };

After the initialization, the program runs in a loop forever, and about every second all the ports are sampled. Upon any “concrete” variation, a JSON message is wrapped up with the new values, then sent to the server.

        //loops forever
        while (true)
        {
            bool hasChanged = false;

            //perform the logic sampling for every port of the array
            for (int i = 0; i < inputPorts.Length; i++)
            {
                if (inputPorts[i].Sample())
                {
                    hasChanged = true;
                }
            }

            if (hasChanged)
            {
                //something has changed, so wrap up the data transaction
                var jobj = new JObject();
                jobj["devId"] = "01234567";
                jobj["ver"] = 987654321;

                var jdata = new JArray();
                jobj["data"] = jdata;

                //append only the port data which have been changed
                for (int i = 0; i < inputPorts.Length; i++)
                {
                    IInput port;
                    if ((port = inputPorts[i]).HasChanged)
                    {
                        port.Serialize(jdata);
                    }
                }

                //execute the query against the server
                ms.ApiOperation(
                    "myapi",
                    MobileServiceClient.Create,
                    jobj
                    );
            }

            //invert the led status
            _led.Write(
                _led.Read() == false
                );

            //take a rest...
            Thread.Sleep(1000);
        }

Composing the JSON message is maybe the simplest part, thanks to the LINQ-like style of my Micro-JSON library.

The LED toggling is just a visual heartbeat monitor.

The message schema.

In my mind, there should be more than just a single board. Better: a more realistic system should connect several devices, even ones different from each other. Then, each device should provide its own data, and all the data coming into the server would make up a big bunch of “variables”.

For this reason, it’s important to distinguish where the data originate, so a kind of “device identification”, unique in the system, is included in every message.

Moreover, I think that the set of variables exposed by a device could change at any time. For example, I may add some new sensors, rearrange the input ports, or even adjust some data types. All that means the “configuration has changed”, and the server should be informed about that. That’s why there’s a “version identification” as well.

Then comes the real sensor data. It’s just an array of JavaScript objects, each one providing the port (sensor) name and its value.

However, the array will include only the ports marked as “changed”. This trick yields at least two advantages:

- the message carries only the useful data;

- the approach is rather loosely coupled: the server synchronizes automatically.
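One way to picture the “automatic synchronization” is a receiver that keeps the last known value of every variable and overwrites only the ones named in each message. The sketch below is purely illustrative and says nothing about how the Azure side is actually built: `DeviceSnapshot`, `Apply` and `Get` are hypothetical names, and the message is reduced to name/value pairs.

```csharp
using System;
using System.Collections.Generic;

public class DeviceSnapshot
{
    // Last known value of each variable, keyed by port name.
    private readonly Dictionary<string, object> _values =
        new Dictionary<string, object>();

    // Overwrite only the variables present in the message;
    // the others keep their last known value.
    public void Apply(IEnumerable<KeyValuePair<string, object>> changes)
    {
        foreach (var change in changes)
        {
            _values[change.Key] = change.Value;
        }
    }

    public object Get(string name)
    {
        return _values[name];
    }

    public int Count
    {
        get { return _values.Count; }
    }
}
```

Because the receiver never assumes a fixed list of variables, a new port appearing in a message simply becomes a new entry, which is what makes the approach loosely coupled.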

Each variable’s serialization is accomplished by the corresponding method declared in the IInput interface. Here is an example for the analog port:

    public void Serialize(JArray container)
    {
        var jsens = new JObject();
        jsens["name"] = this.Name;
        jsens["value"] = this.Value;
        container.Add(jsens);
    }

Here is the initial message, which always carries all the values:

    {
        "devId": "01234567",
        "ver": 987654321,
        "data": [
            {
                "name": "Switch0",
                "value": true
            },
            {
                "name": "Switch1",
                "value": true
            },
            {
                "name": "Ramp20min",
                "value": 0
            },
            {
                "name": "Ramp30min",
                "value": 50
            },
            {
                "name": "Analog0",
                "value": 0.073260073260073
            },
            {
                "name": "Analog1",
                "value": 45.079365079365
            }
        ]
    }

After that, we can adjust the trimpots and the switches in order to produce a “change”. Upon any of the detected changes, a message is composed and issued:

Single change:

    {
        "devId": "01234567",
        "ver": 987654321,
        "data": [
            {
                "name": "Analog1",
                "value": 52.503052503053
            }
        ]
    }

Multiple changes:

    {
        "devId": "01234567",
        "ver": 987654321,
        "data": [
            {
                "name": "Switch1",
                "value": false
            },
            {
                "name": "Analog1",
                "value": 75.946275946276
            }
        ]
    }

Conclusions.

It’s easy to see that this project is very basic, and there are many parts that could be improved. For example, the program has no recovery when an exception is thrown. However, I wanted to keep the application at a very introductory level.

It’s time to wire up your own prototype, because in the next article we’ll see how to set up the Azure platform for the data processing.
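As a hint at one possible improvement, the upload could be wrapped so that a failed transaction (say, an unplugged cable) does not end the sampling loop. This is just a sketch for desktop .NET: `SafeUpload` and `TryRun` are hypothetical names, and a real device would likely also want to log the error or retry later.

```csharp
using System;

public static class SafeUpload
{
    // Run the upload and report the outcome,
    // instead of letting an exception terminate the caller's loop.
    public static bool TryRun(Action upload)
    {
        try
        {
            upload();
            return true;
        }
        catch (Exception)
        {
            // Swallowed on purpose in this sketch: the caller decides what to do.
            return false;
        }
    }
}
```

In the main loop, the server call would be passed as the action, and a `false` result could be signaled, for instance, by a different LED blinking pattern.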
